Dispatches from @Garyd, your host and Unhacker - on tech, infosec, art, science and making.

Monday, May 18, 2015

Sunday, March 15, 2015

Priority Human Interrupt: A Robotic Imperative?

[This article proposes, by way of an oversimplified fictional UN resolution, a framework guaranteeing human safety and sovereignty in an age of autonomous robotics. At the end, I discuss it in plain language.]


United Nations Document #86065-63

Priority Human Interrupt Resolution

Enacted March 15, 2020

This resolution acknowledges the basic rights, safety, and sovereignty of humans as they relate to advanced technologies including but not limited to autonomous robots. In the interest of protecting those rights, participating members agree to observe and enforce a "Priority Human Interrupt Resolution" (PHIR).

Owing to the matter's technical nature, the focus of PHIR is an architecture, systems and protocols for compliant solutions in the engineering and commercial sectors.

The remainder of this document describes that architecture and its application.

PHIR Basic Proposition

Advanced technologies including but not limited to autonomous robotics can fail, or be operated intentionally or otherwise in such a manner as to infringe on basic human rights and safety. Under these conditions, such systems can be unable to halt the offending operation of their own accord.

These scenarios can put humans at great risk, physical and otherwise. Designed as they are under the constraints of economy and efficiency, these systems make no effort to protect humans from these risks.

PHIR-compliant technologies are designed in a manner prioritizing the basic rights and sovereignty of humans, above all other programming or directives.

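Systems and devices which are compliant have, at all times during operation, the ability to recognize a "priority human interrupt" - and to respond in the safest possible manner.

| Simplified PHIR Architecture |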

These capabilities are to be implemented in distinct and independent computational, electronic and mechanical channels, such that failure of the primary ("host") device cannot impede compliance.

The diagram at left illustrates key aspects of the PHIR architecture.

PHIR Basic Requirements

The specific requirements for PHIR systems are presented as situations which call for immediate priority human interrupt. Compliant devices must detect these situations, and respond in the safest possible manner by immediately halting all operations, or turning themselves off.

Each of the following situations must initiate a Priority Human Interrupt:

- Physical: The device must detect severe physical contact initiated by a human. This should include slaps, punches, kicks, tackles, tipping, and rocking.

- Projectile: The device must detect projectiles launched at it by humans. These should include such things as bullets, rocks, bricks, mud, and dirt as well as domestic items such as blankets or clothing.

- Weapon: The device must detect violent contact with an inorganic material wielded by a human. These should include sticks, poles, clubs or bats, shovels and the like.

- Verbal: The device must detect distressed, fearful or aggressive speech directed at it by a human, regardless of the language or context. This should include shouts, sustained yelling or screaming, and repeated commands of increasing volume.

- Vulnerable: The device must detect humans under the age of 6 years, or under a weight of 34 kg, and cease active operation of components with external physical surfaces. In the specific case of autonomous devices with mobile capability, this requires maintaining either a minimum distance of 15 feet (about 4.6 m), or a grounded inert position for the duration of the contact.
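As a rough illustration only, the five situations above could be checked by an independent monitor along the following lines. This is a minimal sketch, not part of the resolution: the `SensorFrame` fields, the threshold values, and the function names are all hypothetical, and real detection (telling a kick from a bump, say) would be far harder than a single comparison.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Set


class Trigger(Enum):
    PHYSICAL = auto()
    PROJECTILE = auto()
    WEAPON = auto()
    VERBAL = auto()
    VULNERABLE = auto()


@dataclass
class SensorFrame:
    """One snapshot from a hypothetical, host-independent sensor channel."""
    impact_g: float = 0.0                 # peak acceleration from a shock sensor
    incoming_object: bool = False         # something was thrown at the device
    struck_by_tool: bool = False          # hit with a stick, bat, shovel, etc.
    voice_db: float = 0.0                 # loudness of speech aimed at the device
    nearest_human_age: Optional[float] = None        # estimated age in years
    nearest_human_weight_kg: Optional[float] = None  # estimated weight


# Illustrative guesses only; the resolution does not specify these values.
IMPACT_LIMIT_G = 3.0
VOICE_LIMIT_DB = 85.0
# These two come straight from the "Vulnerable" requirement.
MIN_AGE_YEARS = 6
MIN_WEIGHT_KG = 34


def detect_triggers(frame: SensorFrame) -> Set[Trigger]:
    """Return every Basic Requirement situation present in this frame."""
    triggers = set()
    if frame.impact_g >= IMPACT_LIMIT_G:
        triggers.add(Trigger.PHYSICAL)
    if frame.incoming_object:
        triggers.add(Trigger.PROJECTILE)
    if frame.struck_by_tool:
        triggers.add(Trigger.WEAPON)
    if frame.voice_db >= VOICE_LIMIT_DB:
        triggers.add(Trigger.VERBAL)
    age = frame.nearest_human_age
    weight = frame.nearest_human_weight_kg
    if (age is not None and age < MIN_AGE_YEARS) or (
        weight is not None and weight < MIN_WEIGHT_KG
    ):
        triggers.add(Trigger.VULNERABLE)
    return triggers


def must_interrupt(frame: SensorFrame) -> bool:
    """Any single trigger is enough to raise a Priority Human Interrupt."""
    return bool(detect_triggers(frame))
```

The point of the sketch is the shape, not the numbers: the monitor only reads its own sensors and returns a yes/no, so it can sit outside the host device's software and still cut it off.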

PHIR Opt-Out Requirements

Additionally, the following "Opt-Out" requirements apply to any device which collects or processes external data - such as visual, audio or location information - regarding any human, from any sensor that has not been explicitly granted permission to do so by that human.

Opt-Out requirements apply particularly to passive devices used in the consumer sector such as product scanners, point-of-sale systems, and image recognition systems. Individuals who wish to use their Opt-Out rights generate the PHIR Signal (described below).

Compliant devices must detect the PHIR signal, and observe the following restrictions with respect to data regarding the signal source:

- Anonymous: No identifying data is to be stored or processed.

- Invisible: No visual data, including photographic, is to be stored or processed.

- Mobile: No positional data is to be collected, stored or processed.

- Silent: No audio data is to be collected, stored or processed.

- Null: No digital data, such as financial data or product selection is to be collected.
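One way to picture these restrictions is as a filter applied to every record before it is stored. The following is a minimal sketch under stated assumptions: the field names (`identity`, `image`, and so on) and the `filter_record` function are made up for illustration, and a real device would have to stop collecting the data in the first place rather than only dropping it afterwards.

```python
# Hypothetical mapping from each Opt-Out restriction to the record
# fields it forbids. The field names are assumptions for illustration.
OPT_OUT_BLOCKED = {
    "Anonymous": {"identity"},
    "Invisible": {"image", "video"},
    "Mobile": {"position"},
    "Silent": {"audio"},
    "Null": {"payment", "product_selection"},
}


def filter_record(record: dict, opt_out_signal_present: bool) -> dict:
    """Return a copy of the record that is safe to keep.

    With no Opt-Out Signal the record passes through unchanged. With the
    signal present, every field covered by a restriction is dropped.
    """
    if not opt_out_signal_present:
        return dict(record)
    blocked = set().union(*OPT_OUT_BLOCKED.values())
    return {key: value for key, value in record.items() if key not in blocked}
```

For example, a point-of-sale record holding an identity, a photo and a payment card would come out of `filter_record(record, True)` with only its non-personal fields left.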

PHIR Opt-Out Signal

To aid in the implementation of the opt-out requirements, an FM radio signal is to be allocated for the PHIR Opt-Out Signal. Compliant devices are required to detect this signal, and enforce the opt-out program in full.

Open-source circuit designs and software are to be made available, and citizens are to be permitted without license or authorization to generate the signal by any means available to them.

Illustrative Examples

Next-Generation Washing Machine

A mechanical consumer device such as a washing machine must include resistive and shock-detection circuits capable of recognizing repeated, forceful physical contact initiated by a human, and treating that as a priority human interrupt. It must respond to this signal by immediately ceasing all mechanical operations, and breaking all electrical circuits.
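To make "repeated, forceful physical contact" a little more concrete, here is a minimal sketch of the logic such a shock-detection circuit might implement. Everything specific in it is an assumption: the class name, the 2.5 g threshold, the three-hits-in-two-seconds rule, and the idea that readings arrive as timestamped acceleration samples.

```python
from collections import deque


class ShockInterrupt:
    """Latches once enough hard hits arrive within a short time window."""

    def __init__(self, threshold_g=2.5, hits_required=3, window_s=2.0):
        # All three defaults are illustrative guesses, not specified values.
        self.threshold_g = threshold_g
        self.hits_required = hits_required
        self.window_s = window_s
        self._hits = deque()   # timestamps of recent forceful contacts
        self.tripped = False

    def sample(self, t, accel_g):
        """Feed one reading (time in seconds, acceleration in g).

        Returns True once the interrupt has tripped. It stays tripped:
        the machine should not restart itself after halting.
        """
        if self.tripped:
            return True
        if accel_g >= self.threshold_g:
            self._hits.append(t)
        # Forget hits that have fallen outside the time window.
        while self._hits and t - self._hits[0] > self.window_s:
            self._hits.popleft()
        if len(self._hits) >= self.hits_required:
            self.tripped = True
        return self.tripped
```

Requiring several hits in a window is one simple way to separate a person pounding on the lid from a single bump of the laundry basket; the latch reflects the requirement that the machine cease operation rather than pause.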

Point of Sale Facial Recognition System

A facial recognition system capable of correlating image data to a human individual's identity must be able to detect the PHIR Opt-Out Signal. On receipt of the signal, the system must immediately discard any data collected, visual or otherwise, correlated to the signal source. Additionally, facilities must be made available for any person to validate the proper handling of this operation, and to be shown evidence for it.

Wedding Photography Aerial Drone

Whether operated privately, commercially, or autonomously, all mobile robotic devices capable of violating the safety, privacy or sovereignty of humans are to be held to the strictest PHIR compliance. A privately-operated aerial drone for wedding photographs, for example, must comply with both the PHIR Opt-Out requirements and all Basic Requirements.

Autonomous Traffic Data Collection Robot

A mobile, autonomous device exposed to the public at large and not under the operation of a human must adhere to the strictest PHIR compliance. Especially important in such cases are the Physical, Verbal and Vulnerable requirements.

Discussion

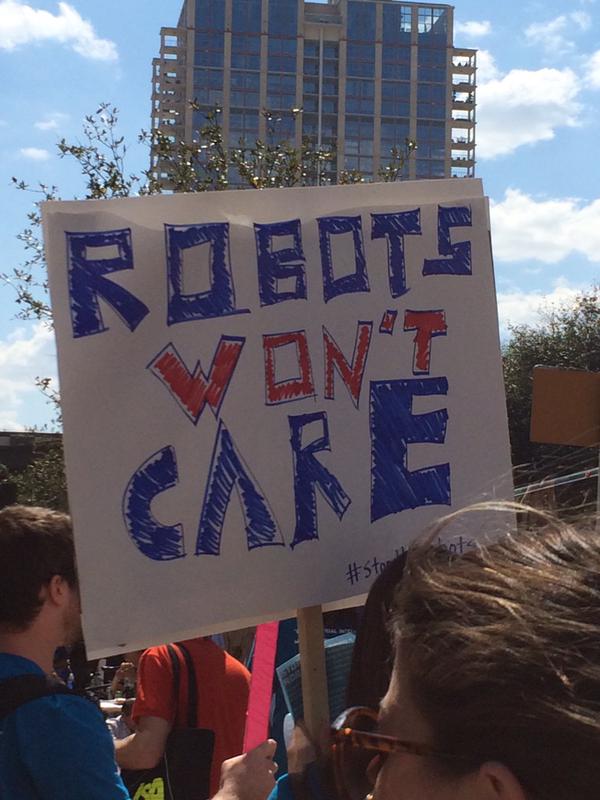

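Okay, end scene. Now straight up, what am I talking about?

| Yeah but neither do most humans |

First-off: No, I'm not one of these "robots are here to kill us" nuts (are they?). Actually, I'm certainly what you would call an amateur robotics enthusiast, having built plenty myself.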

I'm also knee-deep in Genetic Algorithms, Evolutionary Computing and the other techniques behind this newest generation of "AI" driving cars, kicking your ass at video games and generally freaking everyone out.

I'm more likely to be accused of ushering in a techno-apocalypse.

But hacking on this for a decade does give one perspective that probably isn't shared by the uninitiated. I won't go as far as Elon, yet - but he is correct about the speed at which autonomous systems can advance, all on their own.

Evolutionary Computing techniques don't work like the traditional machines you are accustomed to: They "learn" (sort of - close enough, in any case), and do so very, very quickly.

You may not be very impressed by an algorithm that beats a video game. But put in charge of an autonomous (possibly armed) drone, the effects will be devastating. You and I are much easier to track and fire upon than most video game targets.

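| Has already beaten my high score |

You will not be able to evade a drone piloted by genetic algorithm. It will have simulated your next move millions of times before your nerves even signal your legs to run. It will have other weaknesses, yes - but not the sort that favor a 6-year-old girl or 85-year-old man, running from a malfunctioning surveillance drone gone amok.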

I could (probably should) talk about this lots more, but I want to move on - so let's just assume that 20 years hence we'll have fast-learning, possibly armed drones and autonomous devices running about the streets.

But the thing that has always struck me as insane about this possible future is that, as advanced as these machines have already gotten, they seem completely incapable of relating to humans in the simplest terms.

I mean that literally - the simplest things are what our current machines are lacking. I don't really think it's important yet for machines to parse our speech to know what kind of tea we want. I'd rather they first mastered simply knowing that you are screaming at it, and it needs to stop driving over your leg.

This robot, for example, retains its upright position even when bombarded by basketballs. My question is this: Is that a good thing? Is it wise to make such a thing without precautions protecting, you know - us?

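| Definitely not PHIR compliant |

How have we even started down the engineering path to machines that are capable of autonomous action, but not of the simplest civility one would demand from, say, a domesticated animal?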

Thinking on this for some years, I eventually came up with the "Priority Human Interrupt" - the principle that automated systems must be capable of prioritizing basic human sovereignty and safety.

Technologically, it is a simple matter - trivial, in fact. In many scenarios $10-50 USD in electronics is capable of making these determinations, and cutting power or otherwise overriding an autonomous "host" device. The rough sketch I included here would cost about $100, but that's cobbled from commodity parts. A real electronics engineer could cram it into $30.

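Given that is the case, should it be acceptable for a flying delivery drone, Internet-controlled garage door, or even an escalator to not know that a human being is being violated? If a human is beating on a machine, screaming at it - should it not know about this, and stop? This seems an increasingly serious gap in our traditional engineering approach.

Maybe it is a bit early for a PHIR movement. At the moment, most of these devices are far more vulnerable than we fear. I can't think of a single consumer-grade aerial drone, for instance, that could withstand an attack of bed-sheet-and-garden-hose.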

Yes, I just now invented that countermeasure. See how formidable humans are? We are clever, sneaky, and sometimes just plain unpredictable. For the moment, at least, 200 thousand years of Evolution have granted us the upper hand.

How long that will last, however, remains to be seen.

Wednesday, March 11, 2015

Three Things Hackers Know That You Don't

[In this intermission in the "Personal Data Security" series, I discuss key factors in the security landscape that the general public and media overlook.]

The Situation?

The situation is this: In an increasingly digital society, the forces of crime, espionage and anarchy have turned their attacks to the modern computing infrastructure.

Vendors and software developers simply can't keep up with the round-the-clock onslaught, as genius uber-hackers and cyber-terrorists write bewildering, masterful code that no one could have predicted.

Despite their best efforts, major businesses fall prey: Customer data is lost, all parties suffer equally. Curse you, demon horde!

Sorry, but - bullshit.

The Situation

These systems have never, ever been secure.

They were designed insecurely, because market pressures reward the release of the product far more than its security, the latter being basically invisible.

Increasing the security of an operating system, application, or device is mostly a matter of diligence - which means increased complexity. The code will be more complicated, as it checks for many "edge cases" that shouldn't ordinarily occur - but would be deadly if they did. All of this extra checking will slow it down. More time and effort must be invested to make it secure, but also still fast.

If life is good, you will never even know the difference. Because the condition is not supposed to happen, anyways. So that's a lot of extra resources invested in features that you will never know about.

Um, yeah...we're going to invest in that - and lag the competition to market. Real soon.

The Bottom Line is Still Tops

Do you get the "network inspection engine" part of the security product alone, or with the "security intelligence and monitoring" component - which doubles the cost? Can you live without the monitoring component? Probably. Should you? Clearly not. But of course the bottom line will usually win out.

High encryption on everything, or just the "important" systems? Encryption slows everything down, which means you'll need more powerful computers (or routers, or VPN concentrators).

At every turn, the basic economies of business force almost everyone to the lowest common denominator - lest your competitor make widgets cheaper or faster than you.

Cutting close to the bone and running against an enemy of unknown size and force? Sounds like a heartbreak in the works, folks.

Fat is in the Fire

The systems are then deployed insecurely, owing to much the same corner-cutting dynamic.

Sometimes systems are deployed insecurely against the vendor instructions or designs. But often, no additional ineptitude is required: Many of these products bring with them inherently insecure architectures.

For example, most corporate LANs are overflowing with local (usually Windows) communications traffic and capabilities. If you are at your work computer now, there's a very good chance it's offering a wealth of totally superfluous network services - for file sharing, for example - that you never use. What's that you say? Close those unused doors and instantly decrease the "attack surface" dramatically? Great idea! (1)

Unfortunately, years of experience have taught savvy field admins that it is far more expensive to turn such things on "as needed" - usually an in-person, i.e. expensive, process - than to simply turn on everything they even remotely think you might ever use.

But even if you've never used them - and never will - there are people who can and do put them to use (and most of them are on IRC).

The implication that these weaknesses in security (which have led to every single compromise you've ever heard of) are somehow endemic to the nature of modern digital business is provably false. One may as well claim that scissors are just inherently dangerous, and running with them had nothing to do with one's gushing stomach wound. "We are mere victims!" they cry.

Each of these services acts a lot like a door. Some may be locked. Some have little more than an "Employees Only" sign. Others can be tricked into opening, because (guess what) someone didn't write the code to check for that trick. Because (guess why) it wasn't profitable.

This proliferation of services permeates the internets: The computers inside them, their network infrastructure (2) - sometimes even the systems actually designed to defend this stuff.

Nearly everything, everywhere - running unnecessary services and other superfluous components that offer doors that don't even need to exist - out of ignorance, laziness, convenience, or financial expediency.

And you can add your phone to the list. (3)

Give Me Convenience Or Give Me Death

Security experts have long acknowledged that these compound factors make computing too variable to assume everything a machine is asked to do is actually safe.

The solution is limiting what users can do to "ordinary" tasks (say, computing a spreadsheet) and requiring special "super powers" to do crazy things like update Operating System components. Or install software that records your keystrokes.

Oh, hey, funny story: Remember when that IT geek came to install the new Office? Then you tried to print and it said you had to install a printer driver? You clicked "Ok" but it said you needed to be an administrator. Well, you caught geek boy (or girl) and they said they'd install it for you. But you're no baby! Man, you gave them what-for. You must have administrative authority over your machine, right? Make me god, techno gopher! (4)

There's a very good reason that they wanted you to run as a "powerless" user. A powerless user is a safe user. Well - safer, anyway. Unix took this lesson to heart early, though it still falls to users to live by it.
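The "powerless user" idea can be sketched in a few lines. This is only an illustration under assumptions: the function names are made up, and it relies on the Unix convention that an effective user ID of 0 means administrator ("root"), read with Python's standard `os.geteuid()` (not available on Windows).

```python
import os


def is_privileged(euid=None):
    """True if the (given or current) effective user ID is root's.

    The optional argument exists so the check can be exercised without
    actually being root; by default the real ID is read (Unix only).
    """
    if euid is None:
        euid = os.geteuid()
    return euid == 0


def run_ordinary_task(task, euid=None):
    """Run an everyday task, but refuse to do it with super powers.

    If the task is ever tricked into something nasty, it can then only
    do as much damage as a powerless user could.
    """
    if is_privileged(euid):
        raise PermissionError(
            "refusing to run an ordinary task with administrator rights"
        )
    return task()
```

Computing a spreadsheet goes through `run_ordinary_task`; installing a driver does not, and has to ask for its super powers explicitly. That is the whole trick, and it is exactly the one you argued your way out of.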

And did someone mention digital death? Well...that would be my department.

Securing the Eggshell

Now you've got a network full of insecure operating systems, dialed-down to their weakest setting so you don't complain to the admins - who have given up, and are trolling Craigslist and playing Fantasy Football.

But we can still secure the network itself, right? You've got firewalls, and intrusion prevention systems, and web application filtering doohickeys. We can watch for foul play, and release the hounds at the first sign of trouble!

But at what sign? There is so much activity in and around a modern corporate or service network, it's like trying to hear a whisper at a Metallica concert (5). The subtleties of these architectures are now such that it requires an expert - in your specific environment - and armed with some pretty fancy technology, to distinguish between what is "normal" and what is not.

In the best environments, you will find this person (or persons) in and around the Network Engineering, Network Security and/or Security teams. They'll have spent sufficient time and effort - the company will have invested those resources - to know the strengths and weaknesses of the digital "castle" they have vowed to protect. They'll have the tools they need to monitor the chaos, and keep order. They are the cyber-knights of this chapter of digital security. Their story is for another day.

But these capabilities are expensive, and - when employed properly - have no noticeable impact. That's right: If we do our job right, you won't even know we do anything useful, at all. How's that for a selling point, huh?

As you can imagine, this doesn't inspire businesses - who are in it for, you know, profit - to spend a lot of money on security. Or like - any. And if they haven't been hacked, why should they? As far as they know, there's no actual existing problem to be addressed, yet. You try convincing the board to spend half a million dollars on "security intelligence" which hopes to discover - best case scenario - nothing at all.

Of course, as should be obvious to all by now, you either have been - or will be - compromised. You just don't know which, yet.

Regulations to the Rescrew

Wait, did I mean rescue? No. I definitely did not.

The situation is further constrained by regulations that, sadly, are designed to help. While the threat of regulatory penalties (6) helps to balance the demands of running a business at the lowest cost against the expense of good information security, it necessarily inspires companies to prioritize the regulatory demands first.

First in the security budget are all of the things required to ensure the company does not fail an audit for regulatory compliance. Auditing systems, software and services will be bought, and extensive records will be kept. All of which does absolutely nothing to help secure the network, users or systems - but does a great job protecting the company from liabilities when the inevitable incident occurs.

No surprise, then, that there's not usually much left - in love or resources - for the mission of "riding range" in these digital badlands. And as a result, they're just about as rowdy and lawless as the real deal.

Bake Until Crispy

So that's the situation you've got. Inside pretty much every corporate network, most university campuses, nearly every service or vendor network that has anything of value to anyone, and also at a horrifyingly large percentage of important networks and systems that you would really rather not even know about.

That's the rule of the day, in the digital world: Overflowing chaos, mismanagement, and the absolute minimum investment required to cover one's own ass - "never mind the people" as The Clash put it.

In this morass, brilliant but too-small in number, dedicated but under-powered white hats, grey hats, and unhackers (hi!) are losing a battle against expanding hordes of an organism similar to them genetically, but of a lineage criminal, economically disadvantaged, or nihilist. (7)

Mea Culpa, Ad Infinitum

And all of this hand-waving from the businesses, vendors and services begging forgiveness when they lose your data? It is totally - and completely - bullshit.

They knew precisely the risks of the systems, applications and configurations they chose. Because their security people told them. There was a 30-page report. There were instructions on what had to be done and how much it would cost. It was all delivered, signed-off as "accepted risks", and promptly lost behind a filing cabinet in a flooded basement.

They run fast and loose because it is cheap. And that's business - you can't really blame them, any more than you blame car makers for not adding seat belts to cars until customers demanded it.

Nor can you blame them any less.

And that is really where the conversation needs to go: Business must be held accountable to what amounts to a modern consumer's digital safety, with respect to their products and services. It is absolutely not sufficient, or acceptable, for them to simply fall on their swords and claim they were outsmarted by "genius hackers".

Because we know they were not: They were running naked in a world populated by flying, heat-seeking piranhas. And yeah, that's going to hurt. Unfortunately we are all bitten in the process.

Given that the capabilities exist to avoid these incidents, and they choose not to spend money they are not required to spend (big surprise), the now-accepted "oops, sorry" response is not even valid.

Simply put, it's just a cost-effective lie.

The current "digital contract" between business and consumer abandons you and your data to the tender mercies of the internets.

But this isn't new, is it? Isn't it really just the time-honored business classic known as the "screw you"? They cut the corners, you pay the price. I'm pretty sure that if we look at the history of capitalism, we'll find this situation doesn't usually resolve itself in the consumer's favor.

We're the ones that have to demand digital seat belts.

Why Three Things is Enough

That's really the only thing hackers know that others don't. The rest is just "geek trivia" - what code goes where, how some protocol works. None of these things, in their design, are supposed to be dangerous.

What hackers know that you don't is that the "information superhighway" (6) is constructed of eggshells, suspended on popsicle sticks and guarded by unarmed Red Shirts. (8)

The Hacker Secret is simply the reality of the chaos that you are already soaking in:

Humans Are Stupid - But Computers Are Still Stupider

Computers are, even now, still utterly stupid. Hard-wired to obey any instruction alleged to come from their user, in their current form they will probably always be hackable (10). Future computing paradigms may well change this, but the problem we face isn't subtle or academic in any way.

The current security "standard operating procedure" for businesses is so fast and loose, that were it applied to their financial dealings, they'd be in jail. Tomorrow.

This aspect doesn't get much coverage in most media reporting, but you wouldn't expect it to. The corporate victims, paying the technical debt incurred by their shortcuts, would certainly love you to believe there is nothing they could possibly have done any better, in your defense.

Just ask yourself how often, in your dealings with for-profit business, has that ever - ever - been true?

And it's not a glamorous tale to tell, either. A super hacker outsmarting a Fortune 500 has got to be a more romantic story than - oh, say - just plain getting screwed over by negligent business practices, again, for (big surprise) an extra buck on the bottom line?

No, hackers - by and large - are not super-powered cyber wizards. Not that they couldn't be. They just don't have to be.

Because the target is soft as cream cheese. And no one is even watching.

The Situation?

The situation is this: In an increasingly digital society, the forces of crime, espionage and anarchy have turned their attacks to the modern computing infrastructure.

Vendors and software developers simply can't keep up with the round-the-clock onslaught, as genius uber-hackers and cyber-terrorists write bewildering, masterful code that no one could have predicted.

Despite their best efforts, major businesses fall prey: Customer data is lost, all parties suffer equally. Curse you, demon horde!

Sorry, but - bullshit.

The Situation

These systems have never, ever been secure.

They were designed insecurely, because market pressures reward the release of the product far more than its security, the latter being basically invisible.

Increasing the security of an operating system, application, or device is mostly a matter of diligence - which means increased complexity. The code will be more complicated, as it checks for many "edge cases" that shouldn't ordinarily occur - but would be deadly if they did. All of this extra checking will slow it down. More time and effort must be invested to make it secure, but also still fast.

If life is good, you will never even know the difference. Because the condition is not supposed to happen, anyways. So that's a lot of extra resources invested in features that you will never know about.

Um, yeah...we're going to invest in that - and lag the competition to market. Real soon.

The Bottom Line is Still Tops

Do you get the "network inspection engine" part of the security product alone, or with the "security intelligence and monitoring" component - which doubles the cost. Can you live without the monitoring component? Probably. Should you? Clearly not. But of course the bottom line will usually win-out.

High encryption on everything, or just the "important" systems? Encryption slows everything down, which means you'll need more powerful computers (or routers, or VPN concentrators).

At every turn, the basic economies of business force almost everyone to the lowest common denominator - lest your competitor make widgets cheaper or faster than you.

Cutting close to the bone and running against an enemy of unknown size and force? Sounds like a heartbreak in the works, folks.

The systems are then deployed insecurely, owing to much the same corner-cutting dynamic.

Sometimes systems are deployed insecurely against the vendor instructions or designs. But often, no additional ineptitude is required: Many of these products bring with them inherently insecure architectures.

For example, most corporate LANs are overflowing with local (usually Windows) communications traffic and capabilities. If you are at your work computer now, there's a very good chance it's offering a wealth of totally superfluous network services - for file sharing, for example - that you never use. What's that you say? Close those unused doors and instantly decrease the "attack surface" dramatically? Great idea! (1)

Unfortunately, years of experience have taught savvy field admins that it is far more expensive to turn such things on "as needed" - usually an in-person, i.e. expensive, process - than to simply turn on everything they even remotely think you might ever use.

But even if you've never used them - and never will - there are people who can and do put them to use (and most of them are on IRC).
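If you're curious how many doors your own machine is holding open, here is a minimal Python sketch (mine, not part of any product) that tries a TCP connection to each low-numbered port on the local machine and reports the ones that answer. The function name open_tcp_ports, the port range and the timeout are all my own illustrative choices. It only sees TCP services reachable over the loopback address, so treat the result as a rough headcount rather than an audit.

```python
import socket


def open_tcp_ports(host="127.0.0.1", ports=range(1, 1025), timeout=0.2):
    """Return the ports in `ports` that accept a TCP connection on `host`.

    Illustrative helper only: the name and defaults are assumptions,
    and it should only ever be pointed at a machine you own.
    """
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an error
            if s.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Calling open_tcp_ports() with no arguments checks ports 1 through 1024 on your own machine. Every number it returns is a service somebody decided to leave running, whether or not you have ever used it.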

The implication that these weaknesses in security (which have led to every single compromise you've ever heard of) are somehow endemic to the nature of modern digital business, is provably false. One may as well claim that scissors are just inherently dangerous, and running with them had nothing to do with one's gushing stomach wound. "We are mere victims!" they cry.

Each of these services acts a lot like a door. Some may be locked. Some have little more than an "Employees Only" sign. Others can be tricked into opening, because (guess what) someone didn't write the code to check for that trick. Because (guess why) it wasn't profitable.

[Image: Absolutely typical internet stupidity.]

Nearly everything, everywhere - running unnecessary services and other superfluous components that offer doors that don't even need to exist - out of ignorance, laziness, convenience, or financial expediency.

And you can add your phone to the list. (3)

Give Me Convenience Or Give Me Death

Security experts have long acknowledged that these compound factors make computing too variable to assume everything a machine is asked to do is actually safe.

The solution is limiting what users can do to "ordinary" tasks (say, computing a spreadsheet) and requiring special "super powers" to do crazy things like update Operating System components. Or install software that records your keystrokes.

Oh, hey, funny story: Remember when that IT geek came to install the new Office? Then you tried to print and it said you had to install a printer driver? You clicked "Ok" but it said you needed to be an administrator. Well, you caught geek boy (or girl) and they said they'd install it for you. But you're no baby! Man, you gave them what-for. You must have administrative authority over your machine, right? Make me god, techno gopher! (4)

There's a very good reason that they wanted you to run as a "powerless" user. A powerless user is a safe user. Well - safer, anyway. Unix took this lesson to heart early, though it still falls to users to live by it.

And did someone mention digital death? Well...that would be my department.

Securing the Eggshell

Now you've got a network full of insecure operating systems, dialed-down to their weakest setting so you don't complain to the admins - who have given up, and are trolling Craigslist and playing Fantasy Football.

But we can still secure the network itself, right? You've got firewalls, and intrusion prevention systems, and web application filtering doohickeys. We can watch for foul play, and release the hounds at the first sign of trouble!

But at what sign? There is so much activity in and around a modern corporate or service network, it's like trying to hear a whisper at a Metallica concert (5). The subtleties of these architectures are now such that it requires an expert - in your specific environment - and armed with some pretty fancy technology, to distinguish between what is "normal" and what is not.

In the best environments, you will find this person (or persons) in and around the Network Engineering, Network Security and/or Security teams. They'll have spent sufficient time and effort - the company will have invested those resources - to know the strengths and weaknesses of the digital "castle" they have vowed to protect. They'll have the tools they need to monitor the chaos, and keep order. They are the cyber-knights of this chapter of digital security. Their story is for another day.

But these capabilities are expensive, and - when employed properly - have no noticeable impact. That's right: If we do our job right, you won't even know we do anything useful, at all. How's that for a selling point, huh?

As you can imagine, this doesn't inspire businesses - who are in it for, you know, profit - to spend a lot of money on security. Or like - any. And if they haven't been hacked, why should they? As far as they know, there's no actual existing problem to be addressed, yet. You try convincing the board to spend half a million dollars on "security intelligence" which hopes to discover - best case scenario - nothing at all.

Of course, as should be obvious to all by now, you either have been - or will be - compromised. You just don't know which, yet.

Regulations to the Rescrew

Wait, did I mean rescue? No. I definitely did not.

The situation is further constrained by regulations that, sadly, are designed to help. While the threat of regulatory penalties (6) helps to balance the demands of running a business at the lowest cost against the expense of good information security, it necessarily inspires companies to prioritize the regulatory demands first.

First in the security budget are all of the things required to ensure the company does not fail an audit for regulatory compliance. Auditing systems, software and services will be bought, and extensive records will be kept. All of which does absolutely nothing to help secure the network, users or systems - but does a great job protecting the company from liabilities when the inevitable incident occurs.

No surprise, then, that there's not usually much left - in love or resources - for the mission of "riding range" in these digital badlands. And as a result, they're just about as rowdy and lawless as the real deal.

Bake Until Crispy

So that's the situation you've got. Inside pretty much every corporate network, most university campuses, nearly every service or vendor network that has anything of value to anyone, and also at a horrifyingly large percentage of important networks and systems that you would really rather not even know about.

[Image: For our younger readers: The Clash]

In this morass, brilliant but too-small in number, dedicated but under-powered white hats, grey hats, and unhackers (hi!) are losing a battle against expanding hordes of an organism similar to them genetically, but of a lineage criminal, economically disadvantaged, or nihilist. (7)

Mea Culpa, Ad Infinitum

And all of this hand-waving from the businesses, vendors and services begging forgiveness when they lose your data? It is totally - and completely - bullshit.

They knew precisely the risks of the systems, applications and configurations they chose. Because their security people told them. There was a 30-page report. There were instructions on what had to be done and how much it would cost. It was all delivered, signed-off as "accepted risks", and promptly lost behind a filing cabinet in a flooded basement.

They run fast and loose because it is cheap. And that's business - you can't really blame them, any more than you blame car makers for not adding seat belts to cars until customers demanded it.

Nor can you blame them any less.

And that is really where the conversation needs to go: Business must be held accountable to what amounts to a modern consumer's digital safety, with respect to their products and services. It is absolutely not sufficient, or acceptable, for them to simply fall on their swords and claim they were outsmarted by "genius hackers".

Because we know they were not: They were running naked in a world populated by flying, heat-seeking piranhas. And yeah, that's going to hurt. Unfortunately we are all bitten in the process.

Given that the capabilities exist to avoid these incidents, and they choose not to spend money they are not required to spend (big surprise), the now-accepted "oops, sorry" response is not even valid.

Simply put, it's just a cost-effective lie.

The current "digital contract" between business and consumer abandons you and your data to the tender mercies of the internets.

But this isn't new, is it? Isn't it really just the time-honored business classic known as the "screw you"? They cut the corners, you pay the price. I'm pretty sure that if we look at the history of capitalism, we'll find this situation doesn't usually resolve itself in the consumer's favor.

We're the ones that have to demand digital seat belts.

That's really the only thing hackers know that others don't. The rest is just "geek trivia" - what code goes where, how some protocol works. None of these things, in their design, are supposed to be dangerous.

What hackers know that you don't is that the "information superhighway" (6) is constructed of eggshells, suspended on popsicle sticks and guarded by unarmed Red Shirts. (8)

The Hacker Secret is simply the reality of the chaos that you are already soaking in:

- Systems are not built as well as they could be, and code is not as secure as it could be, because those things cost money, and no one really sees the benefit. (9)

- It is all then deployed insecurely because it's easier and cheaper, and because you insist on installing your own printer drivers. Also Angry Birds.

- The people who could help, who are indeed your only defense, are negated by economic constraints, poorly equipped for the fight, and quickly being overpowered by sheer numbers.

Humans Are Stupid - But Computers Are Still Stupider

Computers are, even now, still utterly stupid. Hard-wired to obey any instruction alleged to come from their user, in their current form they will probably always be hackable (10). Future computing paradigms may well change this, but the problem we face isn't subtle or academic in any way.

The current security "standard operating procedure" for businesses is so fast and loose, that were it applied to their financial dealings, they'd be in jail. Tomorrow.

This aspect doesn't get much coverage in most media reporting, but you wouldn't expect it to. The corporate victims, paying the technical debt incurred by their shortcuts, would certainly love you to believe there is nothing they could possibly have done any better, in your defense.

Just ask yourself how often, in your dealings with for-profit business, has that ever - ever - been true?

And it's not a glamorous tale to tell, either. A super hacker outsmarting a Fortune 500 has got to be a more romantic story than - oh, say - just plain getting screwed over by negligent business practices, again, for (big surprise) an extra buck on the bottom line?

Because the target is soft as cream cheese. And no one is even watching.

Tuesday, January 27, 2015

Top 10 Personal Data Security Tips from a Hacker, # 10 - Cash

A Quick Disclaimer

Okay, I'm really an unhacker, a subtle but important distinction we can cover later. For now, interpret it as meaning I have much of the insight of the hackers you love to fear - but am on your side.

I will also articulate further what I mean by "Personal Data Security", a concept I hope you take to heart: That the conveniences of digital life come with serious risks, which warrant the same rigorous caution you grant a 3,000-pound vehicle, or a table saw.

And that this responsibility, of keeping your digital fingers free of the blade, as it were - is yours alone. Criminals are to blame, to be certain - and layers of corporate bureaucracies are, ultimately, the guilty parties.

But these are still your fingers.

So let's kick this off with a bang, and probably start arguments right away by strongly recommending that you...

Tip #10: Use Cash

What?! Gary wants us all to be robbed in the streets, he's mad!

No, I am not suggesting you waltz around toting gangsta wads of cash - rather that you limit your risk, appropriately. Here, the risk is that your credit card or other financial account information could be captured. We'll leave the means of this "capture" unspecified, but will later explore in great technical detail how such things are accomplished.

Chances are extremely high that you do not live in an area where traditional muggings are common. If you do, you know better than I how much cash you can safely carry. But for most of you, the number of times daily that you are exposed to a "digital mugging" is much higher than for the real-world version.

So let's look at those exposures: When are they occurring? And are the risks being taken appropriate to what is being gained? This is how Corporate Information Security thinks, and it is time you start thinking the same way about your own Personal Data Security.

If you look at transactions for which you use your digital credentials (credit cards, debit cards) you will probably find that most are for fairly small-ticket items: A cappuccino and croissant, $6. A hot-dog at lunch, $5. A beer and chips after work, $8. Would it really be a significant risk to carry $20 or $40 in your pocket?

Learning to Think About Risk Exposure

And here's the important bit that has not been made widely known outside of the Information Security industry: Each of these transactions is exposing your credentials to potentially serious risk of capture.

How much risk? Each time you hand your card to a vendor, data necessary to place transactions against the account is processed by their systems. What does that mean, "their systems"? Well, it could mean a tightly-secured network, closely monitored 24/7 by security experts. Or it could mean a rusty Windows 95 PC in the back office, poorly administered by the store owner, infected with colonies of malware.

So which is which? It can be tough to be sure, but we can draw solid conclusions from simple, empirical observations. The size of the vendor, for instance: It is unlikely that Joe's Hot Dogs can afford a team of specialists to secure their systems: Joe does it himself.

And how about Joe? Does he seem the computer-savvy sort who would know how to safely handle your transaction data? Maybe not.

This might be a good time to just buy your hot-dog with cash.

In It Together: Your Risk and Theirs

Another factor you should consider is the level of exposure to risk shared by the vendor. How motivated are they to secure your transaction data? It is not unusual for vendors to keep transaction details for days, months or forever. Your data will be at risk during the transaction, and possibly long afterward.

Is Joe's exposure to this risk as high as yours? I'm sorry to tell you that it is not.

We're all familiar with the public announcements of compromises at big name vendors (Home Depot, Target) - but what compromises occur with smaller vendors? We don't know, and there's a reason for that: Companies of a certain size are required by law (or other constraints) to disclose these incidents. That is not necessarily so with Joe's Hot Dogs.

With these factors in mind, it should be clear that each digital transaction - online or offline - is a new instance of risk exposure for your data. It should also be obvious that it is wise to limit the number of these instances, and one of the easiest ways is to replace low-ticket digital transactions with good old-fashioned cash.
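To put a rough number on "each transaction is a new instance of risk", here is a small Python sketch. It assumes, purely for illustration, that every swipe carries the same small, independent chance of your card data being captured. Real risk varies wildly from vendor to vendor, and the 1-in-1,000 figure below is a made-up placeholder, not a measured rate. The function name chance_of_capture is mine.

```python
def chance_of_capture(per_swipe_risk, swipes):
    """Probability that at least one of `swipes` transactions is captured.

    Assumes each swipe is independent and equally risky, which is a
    simplification made only to show how exposure accumulates.
    """
    chance_every_swipe_is_safe = (1 - per_swipe_risk) ** swipes
    return 1 - chance_every_swipe_is_safe


# Hypothetical figures: 3 card swipes a day for a year, 0.1% risk each.
yearly = chance_of_capture(per_swipe_risk=0.001, swipes=3 * 365)
print(f"Chance of at least one capture in a year: {yearly:.0%}")
```

The point is not the exact number but the shape: a risk that looks negligible per swipe stops looking negligible once you multiply it by a thousand cappuccinos. Cut the number of swipes and the total drops with it.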

You may also have drawn conclusions about which vendors you should trust with your data, and it should have something to do with the level of risk you and the vendor both share. This is smart, and is an aspect we will explore at length, later in this series.

About Personal Data Security

Okay, so what is this "Personal Data Security" I keep going on about?

Quite simply, I'm suggesting that you need to start thinking about your own personal data assets in the same way as corporations have for decades thought of theirs.

Modern businesses must expose themselves to serious security risks, in order to do business. They cannot circumvent the issue as easily as you and I. Risk is accepted as part of doing business.

But they make sure they understand what the risks are, what can be done about them, and - most importantly - the potential for financial loss in each case. This, by the way, is what unhackers do during the day.

From this, businesses make informed decisions about which risks are worth taking, and which are not. Using this "loss expectancy" insight, they determine how much effort should be invested in securing against a risk, and at what point a risk outweighs the potential benefits.

In your Personal Data Security too, risk cannot be completely avoided. But understanding this dynamic will tell you that the convenience of paying for a hot dog with a card is not sufficient to expose your account data to risks which could lead to serious losses.
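The textbook form of this "loss expectancy" arithmetic is annualized loss expectancy: the cost of a single incident multiplied by how many times a year you expect it to happen. Below is a minimal Python sketch of that comparison. The function names and the dollar figures are hypothetical, chosen only to show the reasoning, and a real assessment would weigh far more than two numbers.

```python
def annualized_loss_expectancy(single_loss, incidents_per_year):
    """Expected yearly loss: cost of one incident times its yearly rate."""
    return single_loss * incidents_per_year


def worth_mitigating(single_loss, incidents_per_year, yearly_cost_of_fix):
    """True when the fix costs less per year than the loss it guards against."""
    expected = annualized_loss_expectancy(single_loss, incidents_per_year)
    return yearly_cost_of_fix < expected


# Hypothetical: a compromised card costs you $2,000 in money and hassle,
# and you guess it happens once every 20 years (0.05 times a year).
print(annualized_loss_expectancy(2000, 0.05))
# Carrying cash for small purchases costs you, say, $10 a year in ATM trips.
print(worth_mitigating(2000, 0.05, yearly_cost_of_fix=10))
```

With those invented figures the expected loss is around $100 a year, so a $10 habit that removes most of the exposure is an easy call. Plug in your own guesses. The exercise of guessing is most of the value.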

Welcome to the Jungle

For the moment, digital commerce is a jungle: Until it is as secure as its real-world counterparts, modern consumers (that's you!) must learn to be aware of the risks involved, and make logical, informed decisions about when those risks are appropriate.

Up Next in this Series...

Coming up (in no order): Devil You Know, Lose Your Stuff, Hedge Your Bets, Fancy Gadgets, Your Friend 7-11, Going Schizo, Uninstall It, and Fake Everything. Yes, those are all real article titles.

Sunday, January 25, 2015

New Tricks for an Old Blog?

I've been wanting to write on a few new topics, so this now ancient and disused blog will be taking a dramatic turn.

Stay tuned for new articles on . . .

- Unhacking: That is to say, not getting hacked.

- Information Security: In general, that is.

- Making: This will be a nice place to write about works-in-progress, before they become articles on my main site.

- Genetic Algorithms: A topic in which I'm increasingly active.

- Android: And Java and Perl and Linux, and other code stuffs.

And probably a new title.

You can probably ignore the ancient archive content, unless you're interested in what the Virtual Worlds industry looked like in 2009.

Next up: "Top 10 Personal Data Security Tips from a Hacker"
