United Nations Document #86065-63

Priority Human Interrupt Resolution

Enacted March 15, 2020

This resolution acknowledges the basic rights, safety, and sovereignty of humans as they relate to advanced technologies including but not limited to autonomous robots. In the interest of protecting those rights, participating members agree to observe and enforce a "Priority Human Interrupt Resolution" (PHIR).

Owing to the matter's technical nature, PHIR focuses on the architecture, systems, and protocols required for compliant solutions in the engineering and commercial sectors.

The remainder of this document describes that architecture and its application.

PHIR Basic Proposition

Advanced technologies including but not limited to autonomous robotics can fail, or can be operated, intentionally or otherwise, in a manner that infringes on basic human rights and safety. Under these conditions, such systems may be unable to halt the offending operation of their own accord. These scenarios can put humans at great risk, physical and otherwise. Designed as they are under the constraints of economy and efficiency, these systems make no effort to protect humans from these risks.

PHIR-compliant technologies are designed to prioritize the basic rights and sovereignty of humans above all other programming or directives.

Figure: Simplified PHIR Architecture

PHIR capabilities are to be implemented in distinct and independent computational, electronic, and mechanical channels, such that failure of the primary ("host") device cannot impede compliance.

The diagram at left illustrates key aspects of the PHIR architecture.
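The resolution does not prescribe an implementation. As a rough illustration only, the latching behavior of an independent interrupt channel can be modeled in software: once any trigger fires, the channel opens the host's power path and stays latched, whatever the host requests. `PhirInterlock`, `PowerRelay`, and the `Trigger` names are hypothetical; in a compliant device this logic would live in separate hardware, not in the host's own code.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Trigger(Enum):
    """The five Basic Requirement situations (hypothetical encoding)."""
    PHYSICAL = auto()
    PROJECTILE = auto()
    WEAPON = auto()
    VERBAL = auto()
    VULNERABLE = auto()


@dataclass
class PowerRelay:
    """Stand-in for a relay in the host's power path (hypothetical)."""
    closed: bool = True

    def open(self) -> None:
        self.closed = False


@dataclass
class PhirInterlock:
    """Independent channel that can cut host power without the host's cooperation."""
    relay: PowerRelay
    latched: bool = False
    events: list = field(default_factory=list)

    def report(self, trigger: Trigger) -> None:
        # Any single trigger is sufficient: record it, latch, and break the circuit.
        self.events.append(trigger)
        self.latched = True
        self.relay.open()

    def host_may_run(self) -> bool:
        # The host's state is never consulted; only the interlock's own latch and relay.
        return not self.latched and self.relay.closed


relay = PowerRelay()
interlock = PhirInterlock(relay)
print(interlock.host_may_run())                 # True
interlock.report(Trigger.PROJECTILE)
print(relay.closed, interlock.host_may_run())   # False False
```

Latching is the design choice that matters in this sketch: a host that keeps retrying cannot re-energize itself, because nothing in the model lets it close the relay again. How a human would reset the device is left open, since the resolution does not address it.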

PHIR Basic Requirements

The specific requirements for PHIR systems are presented as situations that call for an immediate Priority Human Interrupt. Compliant devices must detect these situations and respond in the safest possible manner, by immediately halting all operations or turning themselves off.

Each of the following situations must initiate a Priority Human Interrupt:

- Physical: The device must detect severe physical contact initiated by a human. This should include slaps, punches, kicks, tackles, tipping, and rocking.

- Projectile: The device must detect projectiles launched at it by humans. These should include such things as bullets, rocks, bricks, mud, and dirt as well as domestic items such as blankets or clothing.

- Weapon: The device must detect violent contact with an inanimate object wielded by a human. These should include sticks, poles, clubs or bats, shovels, and the like.

- Verbal: The device must detect distressed, fearful or aggressive speech directed at it by a human, regardless of the language or context. These should include shouts, sustained yelling or screaming, and repeated commands of increasing volume.

- Vulnerable: The device must detect humans under the age of 6 years or under 34 kg in weight, and cease active operation of components with external physical surfaces. For autonomous devices with mobile capability specifically, this requires either maintaining a minimum distance of 15 feet (about 4.6 m) or remaining in a grounded, inert position for the duration of the contact.
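The Vulnerable requirement is the only one with numeric thresholds, so it can be written down directly. The sketch below reads the weight clause as "under 34 kg" and converts the 15-foot clearance to meters; `DetectedHuman` and its estimated age, weight, and distance fields are hypothetical outputs of some perception system, which the resolution leaves unspecified.

```python
from dataclasses import dataclass

FEET_TO_METERS = 0.3048
AGE_LIMIT_YEARS = 6.0                  # "under the age of 6 years"
WEIGHT_LIMIT_KG = 34.0                 # read here as "under 34 kg"
MIN_CLEARANCE_M = 15 * FEET_TO_METERS  # 15 feet = 4.572 m


@dataclass
class DetectedHuman:
    """Hypothetical perception output for one nearby person."""
    est_age_years: float
    est_weight_kg: float
    distance_m: float


def is_vulnerable(h: DetectedHuman) -> bool:
    # Either criterion alone is enough.
    return h.est_age_years < AGE_LIMIT_YEARS or h.est_weight_kg < WEIGHT_LIMIT_KG


def mobile_device_ok(h: DetectedHuman, grounded_inert: bool) -> bool:
    """Near a vulnerable human, a mobile device must keep 15 ft away or stay grounded and inert."""
    if not is_vulnerable(h):
        return True
    return grounded_inert or h.distance_m >= MIN_CLEARANCE_M


toddler = DetectedHuman(est_age_years=3, est_weight_kg=14, distance_m=2.0)
print(mobile_device_ok(toddler, grounded_inert=False))  # False: too close while active
print(mobile_device_ok(toddler, grounded_inert=True))   # True
```

Real age and weight estimates would be uncertain, so how much margin to apply before treating someone as vulnerable is a design decision this sketch does not settle.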

PHIR Opt-Out Requirements

Additionally, the following "Opt-Out" requirements apply to any device which collects or processes external data - such as visual, audio or location information - regarding any human, from any sensor that has not been explicitly granted permission to do so by that human.

Opt-Out requirements apply particularly to passive devices used in the consumer sector such as product scanners, point-of-sale systems, and image recognition systems. Individuals who wish to use their Opt-Out rights generate the PHIR Signal (described below).

Compliant devices must detect the PHIR Opt-Out Signal and observe the following restrictions on data regarding the signal source:

- Anonymous: No identifying data is to be stored or processed.

- Invisible: No visual data, including photographic, is to be stored or processed.

- Mobile: No positional data is to be collected, stored or processed.

- Silent: No audio data is to be collected, stored or processed.

- Null: No digital data, such as financial data or product selections, is to be collected.
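The five restrictions differ in which verbs they cover (collected, stored, processed), a distinction that is easy to lose in an implementation. A hypothetical lookup table transcribing the list might look like this. The category keys are assumptions, and the rule that data which may not be collected may not be stored or processed either is this sketch's own reading, not text from the resolution.

```python
from enum import Enum


class Op(Enum):
    COLLECT = "collect"
    STORE = "store"
    PROCESS = "process"


# Forbidden operations per data category, transcribed from the Opt-Out list.
RESTRICTIONS = {
    "identifying": {Op.STORE, Op.PROCESS},             # Anonymous
    "visual": {Op.STORE, Op.PROCESS},                  # Invisible
    "positional": {Op.COLLECT, Op.STORE, Op.PROCESS},  # Mobile
    "audio": {Op.COLLECT, Op.STORE, Op.PROCESS},       # Silent
    "digital": {Op.COLLECT},                           # Null
}


def is_permitted(category: str, op: Op, source_opted_out: bool) -> bool:
    """Return whether `op` may be applied to `category` data about a given source."""
    if not source_opted_out:
        return True
    forbidden = RESTRICTIONS[category]
    # Assumption: data that may not be collected cannot be stored or processed either.
    if Op.COLLECT in forbidden:
        return False
    return op not in forbidden


print(is_permitted("visual", Op.COLLECT, source_opted_out=True))   # True: only store/process barred
print(is_permitted("visual", Op.STORE, source_opted_out=True))     # False
print(is_permitted("digital", Op.PROCESS, source_opted_out=True))  # False
```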

PHIR Opt-Out Signal

To aid in the implementation of the opt-out requirements, an FM radio frequency is to be allocated for the PHIR Opt-Out Signal. Compliant devices are required to detect this signal and enforce the opt-out requirements in full.

Open-source circuit designs and software are to be made available, and citizens are to be permitted to generate the signal by any means available to them, without license or authorization.

Illustrative Examples

Next-Generation Washing Machine

A mechanical consumer device such as a washing machine must include resistive and shock-detection circuits capable of recognizing repeated, forceful physical contact initiated by a human and of treating it as a Priority Human Interrupt. It must respond to this interrupt by immediately ceasing all mechanical operations and breaking all electrical circuits.
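One way "repeated, forceful physical contact" might be recognized is to count distinct shocks above an acceleration threshold within a short time window. The sketch assumes an accelerometer reporting in g; `RepeatedImpactDetector` and its threshold, hit count, and window defaults are hypothetical, and a real washing machine would need a threshold well above its own spin-cycle vibration.

```python
from collections import deque


class RepeatedImpactDetector:
    """Flags `min_hits` distinct shocks of at least `threshold_g` within `window_s` seconds."""

    def __init__(self, threshold_g: float = 3.0, min_hits: int = 3, window_s: float = 2.0):
        self.threshold_g = threshold_g
        self.min_hits = min_hits
        self.window_s = window_s
        self._hits = deque()   # timestamps of counted shocks
        self._above = False    # was the previous sample above threshold?

    def feed(self, t: float, accel_g: float) -> bool:
        """Feed one sample (time in seconds, acceleration in g); True means raise an interrupt."""
        above = abs(accel_g) >= self.threshold_g
        if above and not self._above:
            # Count rising edges only, so one long shock is a single hit.
            self._hits.append(t)
        self._above = above
        # Forget shocks that have fallen out of the time window.
        while self._hits and t - self._hits[0] > self.window_s:
            self._hits.popleft()
        return len(self._hits) >= self.min_hits


detector = RepeatedImpactDetector()
samples = [(0.0, 0.5), (0.1, 4.0), (0.2, 4.2), (0.3, 0.4), (0.8, 5.0), (0.9, 0.2), (1.4, 3.5)]
print([detector.feed(t, a) for t, a in samples])
# [False, False, False, False, False, False, True]
```

The third blow within two seconds trips the detector; the samples at 0.1 s and 0.2 s belong to the same shock and are counted once.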

Point of Sale Facial Recognition System

A facial recognition system capable of correlating image data to an individual's identity must be able to detect the PHIR Opt-Out Signal. On receipt of the signal, the system must immediately discard any data, visual or otherwise, that has been collected and correlated to the signal source. Additionally, facilities must be made available for any person to verify that this operation was handled properly and to be shown evidence of it.
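A minimal sketch of the purge step, assuming the system has already associated the incoming signal with a tag for the person who sent it (the resolution does not say how that correlation is made). `RecognitionStore`, `PurgeReceipt`, and `source_tag` are hypothetical names. The receipt and the `holds` check stand in for the "evidence" facility; a real one would need to be independently auditable rather than self-reported.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class PurgeReceipt:
    """Record that could be shown to the person who sent the opt-out signal (hypothetical)."""
    source_tag: str
    records_deleted: int
    timestamp: float


class RecognitionStore:
    def __init__(self) -> None:
        # record_id -> (source_tag, payload); payload might be an image crop, a basket, etc.
        self._records: dict[str, tuple[str, bytes]] = {}

    def add(self, record_id: str, source_tag: str, payload: bytes) -> None:
        self._records[record_id] = (source_tag, payload)

    def purge(self, source_tag: str) -> PurgeReceipt:
        """Discard every record correlated with the signal source, visual or otherwise."""
        doomed = [rid for rid, (tag, _) in self._records.items() if tag == source_tag]
        for rid in doomed:
            del self._records[rid]
        return PurgeReceipt(source_tag, len(doomed), time.time())

    def holds(self, source_tag: str) -> bool:
        """Lets a person or auditor check that nothing about the source remains."""
        return any(tag == source_tag for tag, _ in self._records.values())


store = RecognitionStore()
store.add("r1", "shopper-7", b"face-crop")
store.add("r2", "shopper-7", b"basket")
store.add("r3", "shopper-9", b"face-crop")
receipt = store.purge("shopper-7")
print(receipt.records_deleted, store.holds("shopper-7"), store.holds("shopper-9"))  # 2 False True
```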

Wedding Photography Aerial Drone

Whether operated privately, commercially, or autonomously, all mobile robotic devices capable of violating the safety, privacy, or sovereignty of humans are to be held to the strictest PHIR compliance. A privately operated aerial drone for wedding photography, for example, must comply with both the PHIR Opt-Out Requirements and all Basic Requirements.

Autonomous Traffic Data Collection Robot

A mobile, autonomous device exposed to the public at large and not under the operation of a human must adhere to the strictest PHIR compliance. Especially important in such cases are the Physical, Verbal and Vulnerable requirements.

Discussion

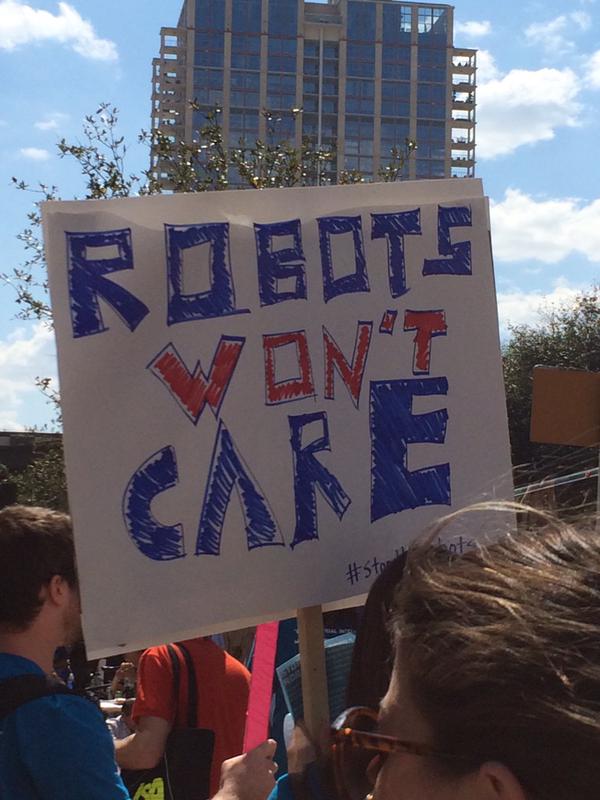

Okay, end scene. Now straight up, what am I talking about? |

| Yeah but neither do most humans |

I'm also knee-deep in Genetic Algorithms, Evolutionary Computing and the other techniques behind this newest generation of "AI" driving cars, kicking your ass at video games and generally freaking everyone out.

I'm more likely to be accused of ushering in a techno-apocalypse.

But hacking on this for a decade does give one a perspective that probably isn't shared by the uninitiated. I won't go as far as Elon, yet, but he is correct about the speed at which autonomous systems can advance, all on their own.

Evolutionary Computing techniques don't work like the traditional machines you are accustomed to: They "learn" (sort of - close enough, in any case), and do so very, very quickly.

You may not be very impressed by an algorithm that beats a video game. But put one in charge of an autonomous (possibly armed) drone, and the effects will be devastating. You and I are much easier to track and fire upon than most video game targets.

Figure: Has already beaten my high score

I could (probably should) talk about this lots more, but I want to move on - so let's just assume that 20 years hence we'll have fast-learning, possibly armed drones and autonomous devices running about the streets.

But the thing that has always struck me as insane about this possible future is that, as advanced as these machines have already gotten, they seem completely incapable of relating to humans in the simplest terms.

I mean that literally: the simplest things are what our current machines are lacking. I don't really think it's important yet for machines to parse our speech to know what kind of tea we want. I'd rather they first mastered simply knowing that you are screaming at them, and that they need to stop driving over your leg.

This robot, for example, retains its upright position even when bombarded by basketballs. My question is this: Is that a good thing? Is it wise to make such a thing without precautions protecting, you know - us?

Figure: Definitely not PHIR compliant

Thinking on this for some years, I eventually came up with the "Priority Human Interrupt": the principle that automated systems must be capable of prioritizing basic human sovereignty and safety.

Technologically, it is a simple matter - trivial, in fact. In many scenarios $10-50 USD in electronics is capable of making these determinations, and cutting power or otherwise overriding an autonomous "host" device. The rough sketch I included here would cost about $100, but that's cobbled from commodity parts. A real electronics engineer could cram it into $30.
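To illustrate how little computation some of these determinations need, here is a crude proxy for the Verbal requirement: flag sound that stays loud over several consecutive audio frames. This is a swapped-in loudness heuristic, not distress or emotion recognition, and it knows nothing about language; `sustained_loudness`, the -10 dBFS threshold, and the five-frame run are hypothetical choices for the example.

```python
import math
from typing import Iterable, Sequence


def rms_dbfs(frame: Sequence[float]) -> float:
    """RMS level of one frame of samples in [-1, 1], in dB relative to full scale."""
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def sustained_loudness(frames: Iterable[Sequence[float]],
                       threshold_dbfs: float = -10.0, min_frames: int = 5) -> bool:
    """True once `min_frames` consecutive frames reach `threshold_dbfs`."""
    run = 0
    for frame in frames:
        # Any quiet frame resets the run, so brief bangs do not count as yelling.
        run = run + 1 if rms_dbfs(frame) >= threshold_dbfs else 0
        if run >= min_frames:
            return True
    return False


loud = [0.8, -0.8] * 10      # RMS 0.8, about -1.9 dBFS
quiet = [0.01, -0.01] * 10   # RMS 0.01, -40 dBFS
print(sustained_loudness([loud] * 5))                          # True
print(sustained_loudness([loud] * 4 + [quiet] + [loud] * 4))   # False
```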

Maybe it is a bit early for a PHIR movement. At the moment, most of these devices are far more vulnerable than we fear. I can't think of a single consumer-grade aerial drone, for instance, that could withstand a bed-sheet-and-garden-hose attack.

Yes, I just now invented that countermeasure. See how formidable humans are? We are clever, sneaky, and sometimes just plain unpredictable. For the moment, at least, 200,000 years of evolution have granted us the upper hand.

How long that will last, however, remains to be seen.